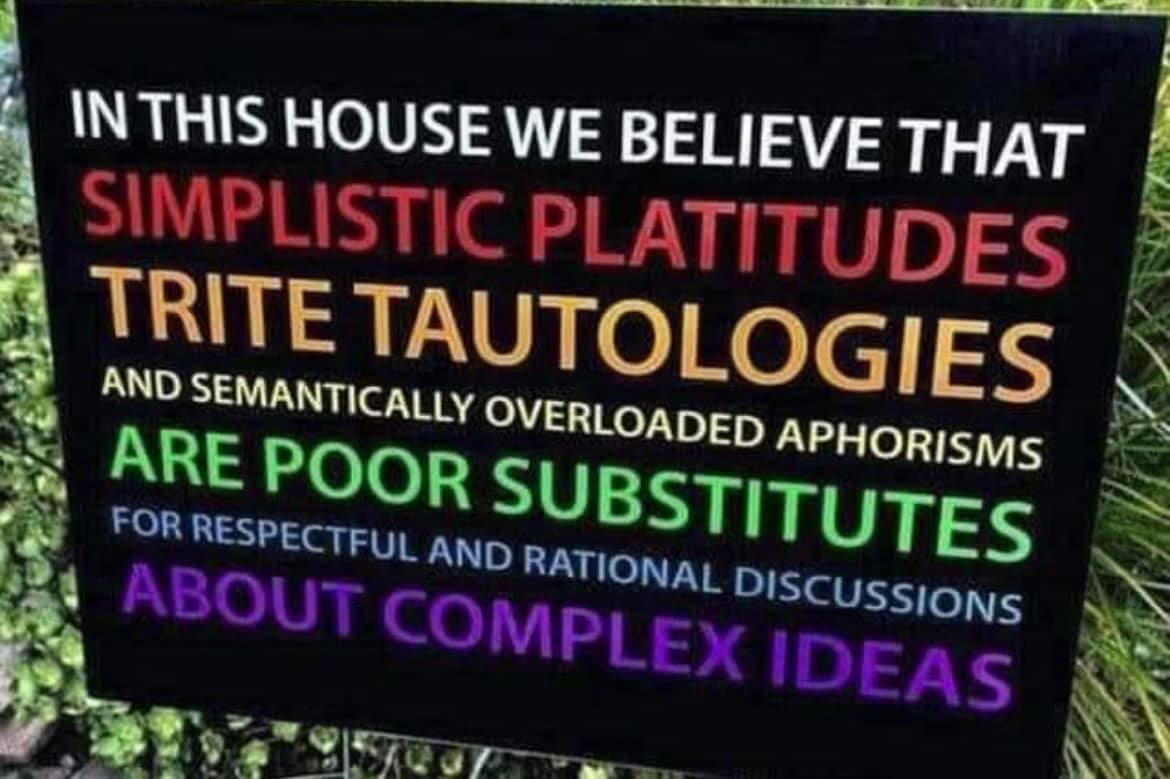

I believe in free speech and respectful debate

People have the right to be wrong. No matter how strongly you hold a belief, respect the humanity of those who disagree with you.

I find that the people who are most impressive or aspirational to me often strike me as assholes, and yet I do not therefore want to be an asshole.

I wonder whether their assholery is a necessary or approximately inevitable consequence of their success, a partial cause of my impressedness, or something else.

(Examples: John Wentworth, Sam Kriss)

Edit: confidence is part of the explanation, but not the whole story, I think.

(I have not graduated yet, so this assumes my plans hold)

I feel like Opus 4.5 is honestly a psychohazard at this point; the EQ is so high.

4o was always such a turn-off for me, but it messed up a bunch of other people.

But I don’t feel the same instinctive turn-off from Opus, which scares me.

An AI investment bubble could burst soon, but that wouldn’t really change my view on the core questions: How hard is it to build AGI? How close are we to AI that transforms the economy and creates serious risks?

I actually hope the bubble does burst, because it would likely slow down the competitive race between AI companies and give us more time to prepare. But whether the bubble bursts or not will probably come down to (in the grand scheme of things) fairly small differences in AI capabilities over the next couple of years.

I was a little shocked to learn that the Post Correspondence Problem is undecidable. But when it is phrased in terms of the unrecognizability of its complement, it is a lot less shocking.
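To make the recognizable/unrecognizable asymmetry concrete, here is a minimal sketch of a brute-force search for a PCP match. `find_pcp_match` and its `max_len` cutoff are hypothetical names chosen for illustration. Without the cutoff, the search halts whenever a match exists, which is what makes PCP recognizable. On an instance with no match, such as the single domino `("a", "aa")`, the unbounded search would run forever, and no procedure can confirm "no match" in general.

```python
from collections import deque
from typing import List, Optional, Tuple

Domino = Tuple[str, str]  # (top string, bottom string)


def find_pcp_match(dominoes: List[Domino], max_len: int) -> Optional[List[int]]:
    """Breadth-first search over domino index sequences of length <= max_len.

    A true recognizer would drop max_len and keep searching forever; the
    bound only stops this demo from looping on instances with no match.
    """
    # Each state is (indices used so far, top string, bottom string).
    queue = deque(([i], top, bot) for i, (top, bot) in enumerate(dominoes))
    while queue:
        seq, top, bot = queue.popleft()
        # Prune: unless one string is a prefix of the other, no extension can match.
        if not (top.startswith(bot) or bot.startswith(top)):
            continue
        if top == bot:
            return seq  # BFS order means this is a shortest match
        if len(seq) >= max_len:
            continue
        for i, (t, b) in enumerate(dominoes):
            queue.append((seq + [i], top + t, bot + b))
    return None


# Classic textbook instance: dominoes b/ca, a/ab, ca/a, abc/c.
print(find_pcp_match([("b", "ca"), ("a", "ab"), ("ca", "a"), ("abc", "c")], 8))
# No match exists here; only the cutoff lets the search give up.
print(find_pcp_match([("a", "aa")], 10))
```

The first call finds the match a/ab, b/ca, ca/a, a/ab, abc/c (both rows spell `abcaaabc`). The second call returns `None` only because of the artificial bound. In that case the cutoff reports "no match found yet", not "no match exists".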

There is almost always a mismatch between our intuitive sense of what a concept means and any abstract, formal definition of it.

I would like to explain clearly why this mismatch exists and find a way forward: a framework in which intuitive, human-native concepts can be discussed with appropriate degrees of precision.

There are two (or three) related but distinct kinds of intelligence: speed and correctness.

Correctness might be divided into accuracy (how good one's predictions are) and precision (how many things one is able to predict).

It is possible to have a very fast intelligence that understands few things but is highly accurate about the things it does understand. It is also possible to have a very slow intelligence that understands most things well enough to make decent predictions but makes many errors, or any other combination of these traits.
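The two correctness axes can be pulled apart with a toy scoring function. This is a minimal sketch under some assumptions. A predictor may abstain (`None`) on a question. "Accuracy" is scored over the questions it attempts. "Coverage" stands in for what the text calls precision (how many things it predicts at all). `score` and `Report` are hypothetical names, and speed is not modeled here.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Report:
    accuracy: float  # share of attempted predictions that were right
    coverage: float  # share of questions attempted (the "precision" axis)


def score(predictions: List[Optional[bool]], truth: List[bool]) -> Report:
    """None in predictions means the predictor abstained on that question."""
    attempted = [(p, t) for p, t in zip(predictions, truth) if p is not None]
    correct = sum(1 for p, t in attempted if p == t)
    accuracy = correct / len(attempted) if attempted else 0.0
    coverage = len(attempted) / len(truth) if truth else 0.0
    return Report(accuracy, coverage)


truth = [True, False, True, False]
narrow = [True, None, None, None]  # attempts little, but is right when it does
broad = [True, True, True, True]   # attempts everything, often wrong
print(score(narrow, truth))  # Report(accuracy=1.0, coverage=0.25)
print(score(broad, truth))   # Report(accuracy=0.5, coverage=1.0)
```

The narrow predictor matches the first profile described above, and the broad one matches the second. Neither number alone says which is "smarter".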

In practice, if you are slow and correct, you are often thought to be dumb, and if you are fast and wrong, you are also thought to be dumb. These are different failure modes, however.